CV | Google Scholar | Semantic Scholar | GitHub | Twitter | LinkedIn | Instagram

More about me: Within My Mind?! - Classic Short Stories | Fun/Favorites | Lore Podcast Summaries (help needed!)

I am a second-year Ph.D. candidate at École Polytechnique Fédérale de Lausanne (EPFL) working

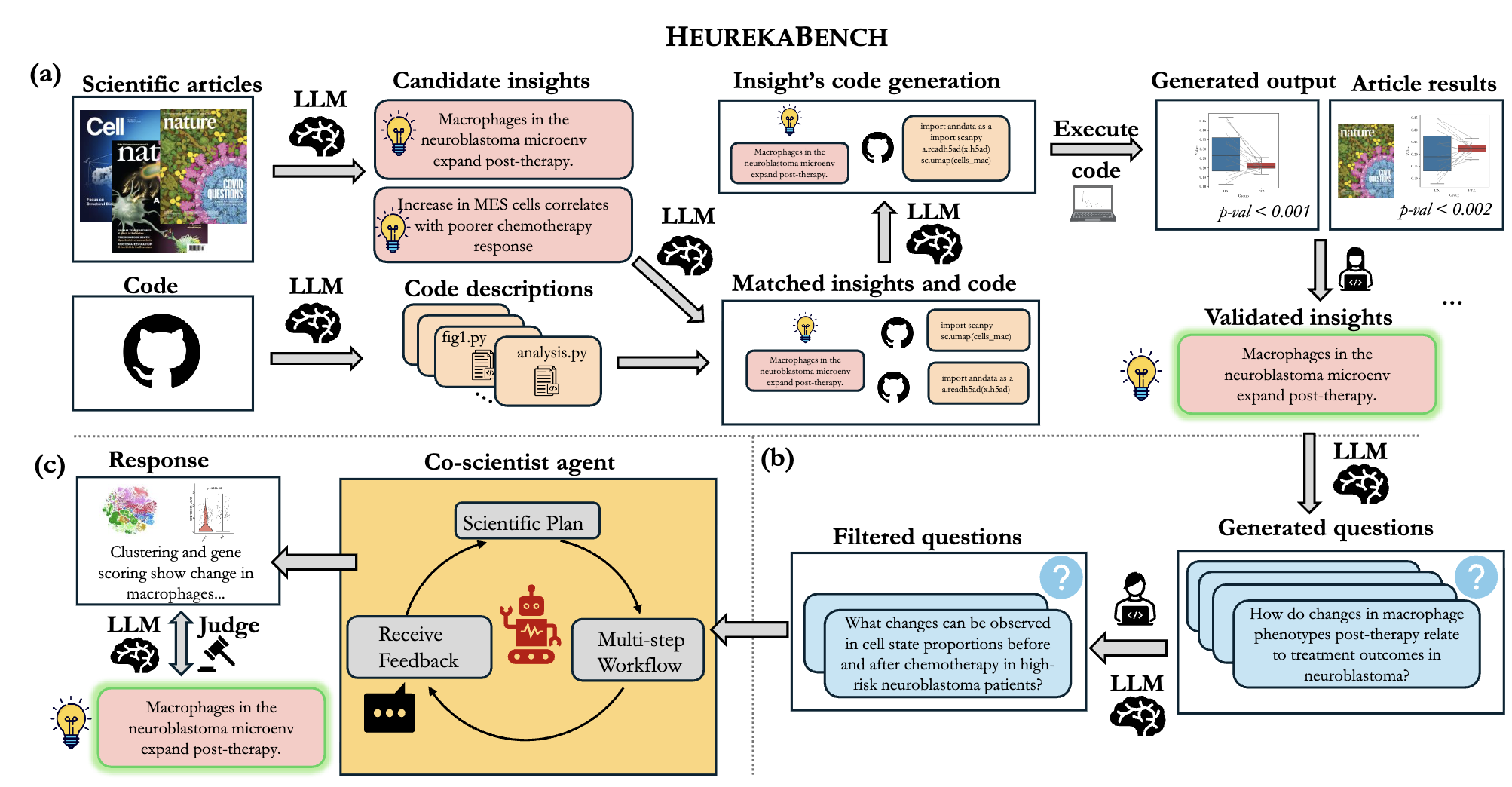

with Prof. Maria Brbic in the MLBio Lab. My research interests span LLM agents, building AI Co-scientists, AI for Scientific Discovery, and geometric deep learning.

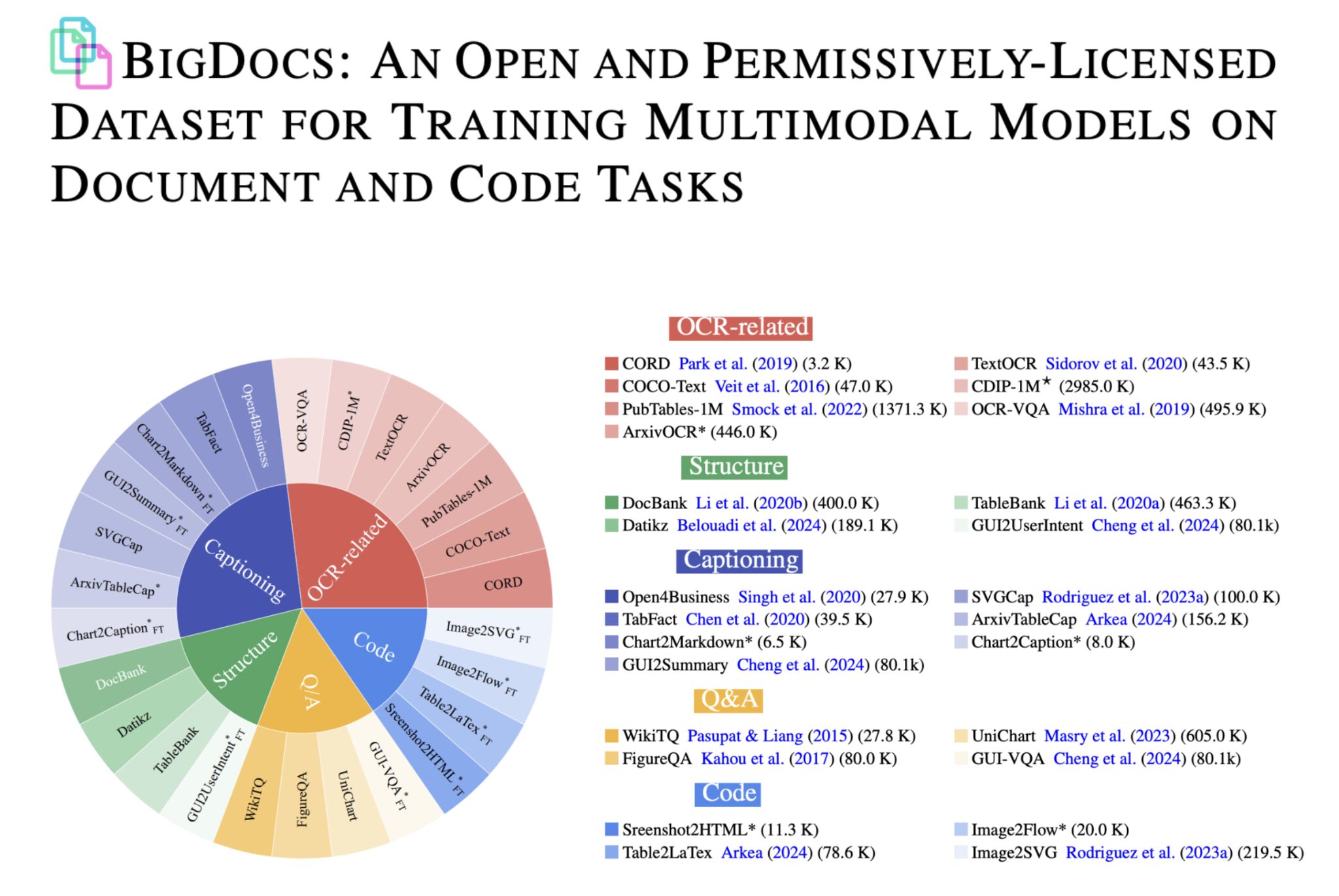

Previously, I was a visiting researcher at ServiceNow Research in the Multimodal Foundation Models team, building foundation models for structured document understanding. I was also a Research Intern with the PROSE team at Microsoft, where I designed algorithms and evaluation setups for email classification in real-world (online) settings. I was fortunate to work on topological data analysis at Adobe Research, India, and on explainability in pre-trained language models at INK-Lab, University of Southern California, under Prof. Xiang Ren.

I completed my thesis-based M.Sc. in Computer Science at McGill University under the supervision of Prof. Siamak Ravanbakhsh, and was affiliated with Mila during this time. I earned my B.Tech. in Computer Science and Engineering from the Indian Institute of Technology Kharagpur, where I was part of the CVIR Lab under the supervision of Prof. Abir Das and Dr. Rameswar Panda, working on contextual bias and multimodal learning problems.

I am always open to collaborations and discussions. Please feel free to schedule a meeting on Calendly.

Other: Grad Applications | Singapore 🇸🇬 | Montreal 🇨🇦 | Banff 🇨🇦


| [Jan 26] | One paper accepted at ICLR 2026! |

| [Dec 25] | Awarded Swiss AI PhD Fellowship. |

| [Aug 25] | Passed my Ph.D. candidacy exam; I am now a Ph.D. candidate! |

| [Apr 25] | Accepted to attend Amii Upper Bound 2025. |

| [Jan 25] | Two papers accepted at ICLR 2025! |

| [Dec 24] | Participated in the Immunological Genome Project (ImmGen T) consortium at Harvard Medical School. |

| [Dec 24] | Graduated with M.Sc. (Thesis) in Computer Science from McGill University (and Mila)! |

| [Oct 24] | One paper accepted at the NeurIPS 2024 Workshop on RBFM. |

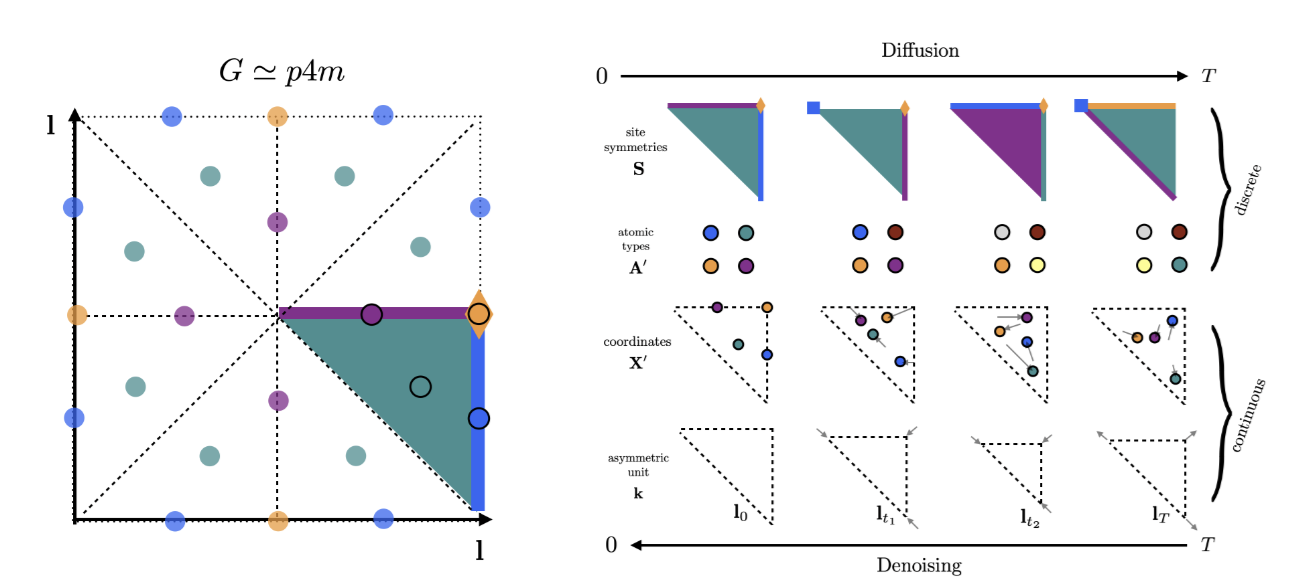

| [Oct 24] | One paper accepted at the NeurIPS 2024 AI4Mat workshop, with a spotlight talk. |

| [Sep 24] | Received EDIC Fellowship offer to start my Ph.D. at EPFL! |

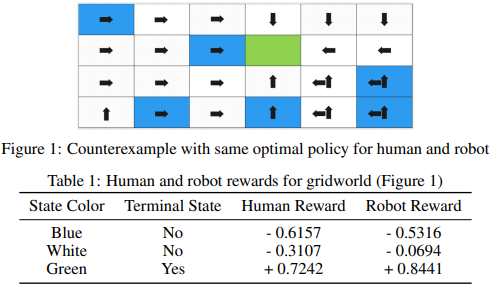

| [Jul 24] | One blog post accepted at the GRaM workshop, ICML 2024! Blog post here. |

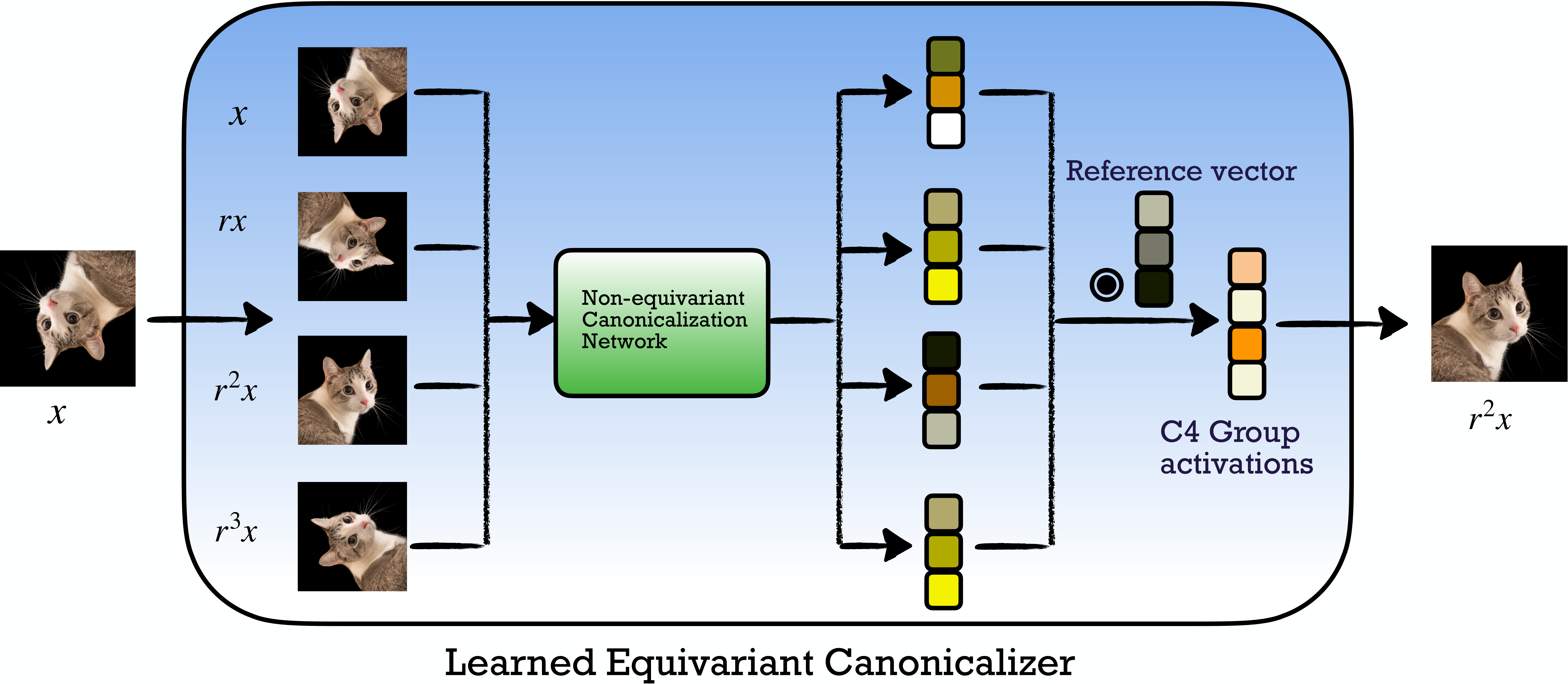

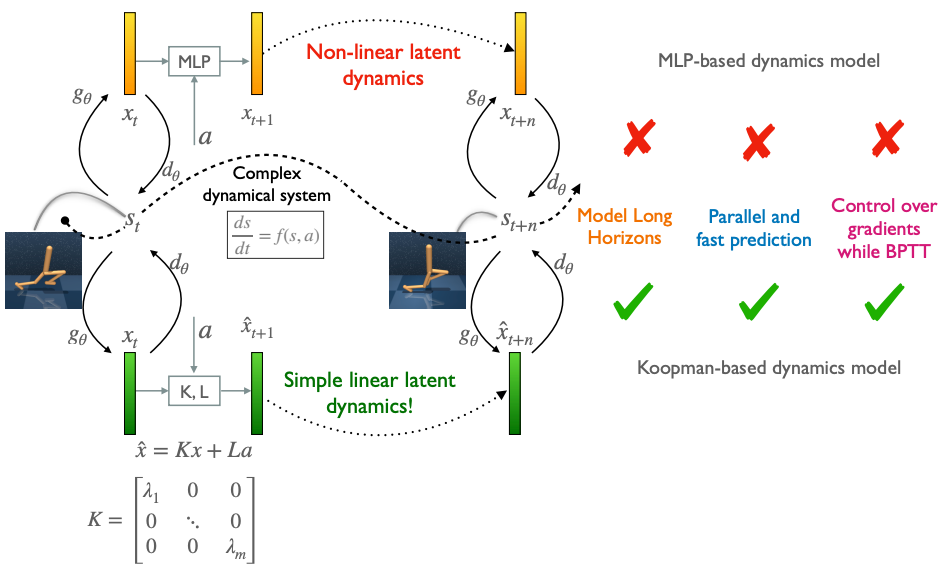

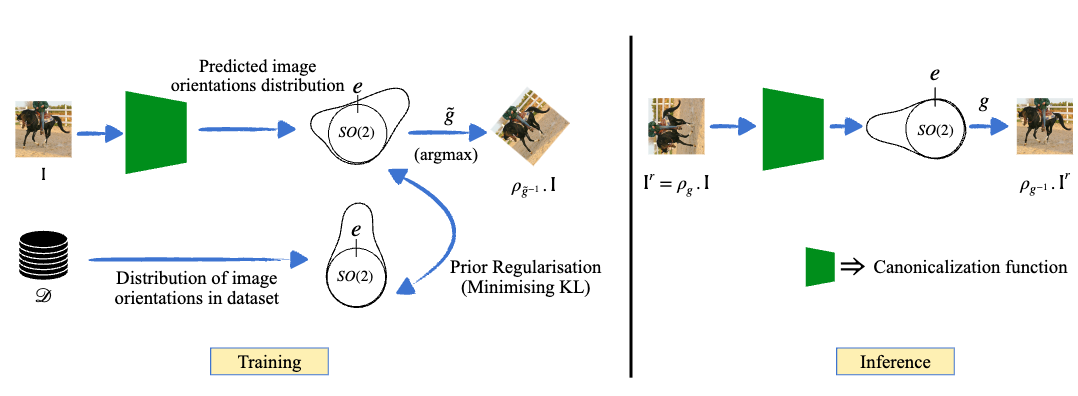

| [May 24] | Two papers accepted at the EquiVision workshop, CVPR 2024, with a spotlight talk! Slides here. |


Template: this